Technological singularity

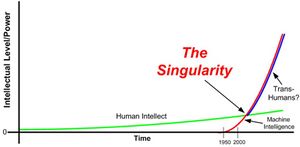

The Singularity (more fully, the "Technological Singularity") is an envisaged future period when artificial intelligence becomes more generally capable than humans.

History

Once artificial intelligence surpasses human capability, an "intelligence explosion" might follow, as first described by I. J. Good in 1964:[1]

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an "intelligence explosion," and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.

Vernor Vinge coined the term "the singularity" in this sense in an article in the January 1983 issue of Omni magazine:[2]

We are at the point of accelerating the evolution of intelligence itself... The evolution of human intelligence took millions of years. We will devise an equivalent advance in a fraction of that time. We will soon create intelligences greater than our own. When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the centre of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolations to an interstellar future impossible.

Ray Kurzweil is widely credited with popularising the term through his 2005 book The Singularity Is Near.

Definitions

The notion of the "Technological Singularity" is often conflated with several related ideas:[3]

- The transcendence of biology by humans (as featured on the cover of the book The Singularity Is Near)

- A period of time in which development happens exceedingly rapidly

- A period of time beyond which it is impossible to foresee further developments

Further confusion arises because Singularity University does not focus on the Technological Singularity itself, but on the accelerating pace of technological development.

If it takes place, the potential outcomes of the Technological Singularity range from extremely good to extremely bad.

Contrasting views on the Singularity

Eliezer Yudkowsky gave the following answers to the question "How does your vision of the Singularity differ from that of Ray Kurzweil?"[4]

- I don't think you can time AI with Moore's Law. AI is a software problem.

- I don't think that humans and machines "merging" is a likely source for the first superhuman intelligences. It took a century after the first cars before we could even begin to put a robotic exoskeleton on a horse, and a real car would still be faster than that.

- I don't expect the first strong AIs to be based on algorithms discovered by way of neuroscience any more than the first airplanes looked like birds.

- I don't think that nano-info-bio "convergence" is probable, inevitable, well-defined, or desirable.

- I think the changes between 1930 and 1970 were bigger than the changes between 1970 and 2010.

- I buy that productivity is currently stagnating in developed countries.

- I think extrapolating a Moore's Law graph of technological progress past the point where you say it predicts smarter-than-human AI is just plain weird. Smarter-than-human AI breaks your graphs.

- Some analysts, such as Ilkka Tuomi, claim that Moore's Law broke down in the '00s. I don't particularly disbelieve this.

- The only key technological threshold I care about is the one where AI, which is to say AI software, becomes capable of strong self-improvement. We have no graph of progress toward this threshold and no idea where it lies (except that it should not be high above the human level because humans can do computer science), so it can't be timed by a graph, nor known to be near, nor known to be far. (Ignorance implies a wide credibility interval, not being certain that something is far away.)

- I think outcomes are not good by default - I think outcomes can be made good, but this will require hard work that key actors may not have immediate incentives to do. Telling people that we're on a default trajectory to great and wonderful times is false.

- I think that the "Singularity" has become a suitcase word with too many mutually incompatible meanings and details packed into it, and I've stopped using it.

External links

Sources

- What is The Singularity? by Vernor Vinge, 1993

- Three Major Singularity Schools, by Eliezer Yudkowsky, 2007

- 17 definitions of the Technological Singularity, collected by Nikola Danaylov

- Superintelligence: Paths, Dangers, Strategies, by Nick Bostrom

- Our Final Invention: Artificial Intelligence and the End of the Human Era, by James Barrat

- The Technological Singularity, by Murray Shanahan

- Surviving AI: The promise and peril of artificial intelligence, by Calum Chace

- Singularity Hypotheses: A Scientific and Philosophical Assessment, edited by Amnon Eden et al.

- The Long-term Future of (Artificial) Intelligence (video), by Stuart Russell

- Superintelligence: Fears, Promises and Potentials, by Ben Goertzel