Existential risk

An existential risk or existential threat is a potential development that could drastically (or even totally) reduce the capabilities of humankind.

Should I worry?

According to the Global Challenges Foundation, a typical person could be five times more likely to die in a mass extinction event than in a car crash.[1] The Global Priorities Project later issued a retraction of the statement.[2]

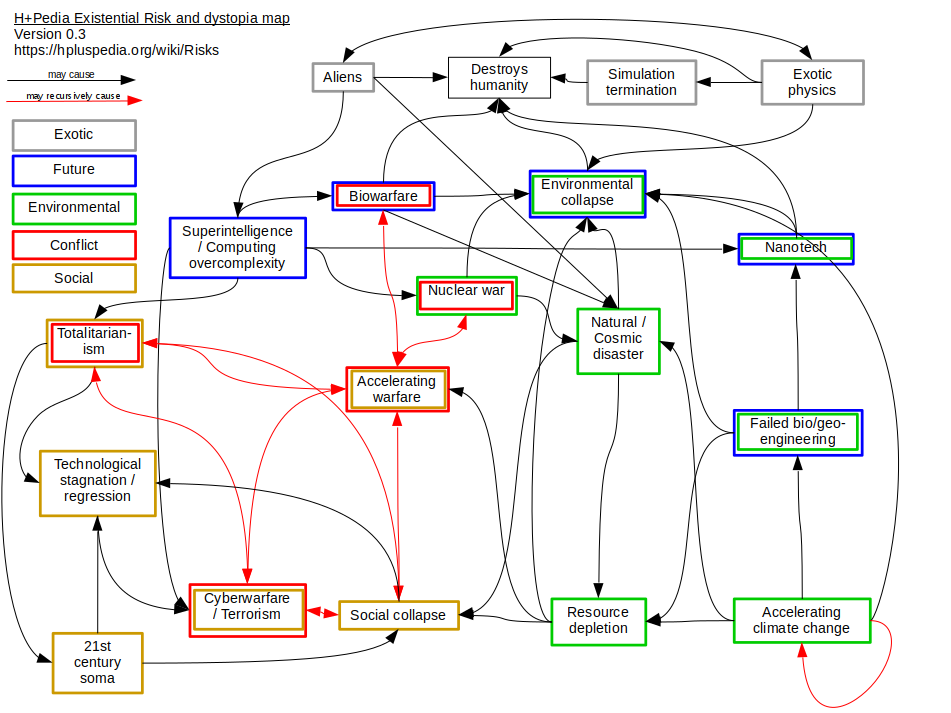

Dystopia and Risk map

Map created by Deku-shrub from the data below.

Key risks and scenarios

The following list of risks encompasses long-term dystopian scenarios in addition to traditionally defined existential risks.

Exotic

- Alien intervention

- Examples: Contact, Independence Day, Knowing[3]

- Causing: Direct destruction of humanity, natural/cosmic disasters or anything a superintelligence can do

- Simulation termination

- Examples: The Matrix

- Causing: Direct destruction of humanity

- Exotic physics

- Examples: Creation of false vacuums

- Causing: Direct destruction, simulation termination or environmental collapse

Conflict

- Nuclear war

- Examples: Anything from a single accidental detonation or rogue state up to the collapse of MAD and multinational warfare

- Causing: Accelerating warfare or environmental collapse

- Biological warfare or a catastrophic natural super virus

- Examples: Nation-state attacks through to a 28 Days Later-style ultra-transmissible and deadly plague

- Causing: Destruction of humanity, accelerating warfare, natural disasters or environmental collapse

- Cyberwarfare, terrorism, rogue states

Environmental

- Accelerating climate change

- Examples: This is happening now :(

- Causing: Further natural disasters, depletion of the world's resources or driving dangerous bio or geo engineering projects

- Errant geo-engineering or GMOs

- Examples: Solar radiation management, carbon dioxide removal projects

- Causing: Depletion of resources, further environmental disasters, nano-pollution or environmental collapse

- Natural or cosmic disasters

- Examples: Asteroid strike, deadly cosmic radiation, ice shelf collapse, bee extinction, crop plagues, ice age. Could even include the Sun's expansion into a red giant, proton decay and the final heat death of the universe.

- Causing: Resource depletion, social or environmental collapse

- Resource depletion

- Examples: Fossil fuels, clean water, serious air quality impact, rare earths, localised overpopulation

- Causing: Social or environmental collapse

- Nanotechnology

- Examples: Gray goo

- Causing: Destruction of humanity, natural disasters or environmental collapse

Future computing and intelligence

- Errant AI, posthuman takeover or computing overcomplexity

- Examples: Unfriendly artificial general intelligence, unfriendly elite transhumans (e.g. Khan from Star Trek)[8] or unstable interconnected computing (e.g. the events of Dune: The Butlerian Jihad)[9]

- Causing: Accelerating warfare, societal collapse, totalitarianism

Social

- Mega-autocratic, neo-fascist or other totalitarian dystopias

- Examples: 1984, Gattaca, Star Trek etc.

- Causing: Social and technological stagnation or 21st century soma

- 21st century soma

- Examples: Brave New World, Idiocracy

- Causing: Technological stagnation or social collapse

- Social collapse

- Examples: Mad Max, 28 Days Later etc.

- Causing: Accelerating warfare, cyberwarfare/terrorism, technological stagnation, autocracy
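The "Causing" entries above can be read as a directed graph in which one scenario leads to others. The following is a minimal Python sketch of that reading, not the method used to build the map. The `CAUSES` dictionary and the `downstream` helper are hypothetical names, the node labels are shortened, and only a simplified subset of the links listed above is encoded.

```python
from collections import deque

# Hypothetical, simplified encoding of some "Causing" links from the list above.
# Keys are scenarios; values are the scenarios they are listed as causing.
CAUSES = {
    "Nuclear war": ["Accelerating warfare", "Environmental collapse"],
    "Resource depletion": ["Social collapse", "Environmental collapse"],
    "Social collapse": ["Accelerating warfare", "Technological stagnation",
                        "Totalitarian dystopia"],
    "Totalitarian dystopia": ["Technological stagnation", "21st century soma"],
    "21st century soma": ["Technological stagnation", "Social collapse"],
}


def downstream(graph, start):
    """Return every scenario reachable from `start` via one or more links."""
    seen = set()
    queue = deque(graph.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited; this also stops loops on cycles
        seen.add(node)
        queue.extend(graph.get(node, []))
    return seen


if __name__ == "__main__":
    for scenario in sorted(downstream(CAUSES, "Resource depletion")):
        print(scenario)
```

Because some scenarios feed back into each other (social collapse, totalitarian dystopia and 21st century soma form a loop in this subset), the traversal tracks visited nodes rather than following links indefinitely.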

Attitudes

Stances that can be adopted towards existential risks include:

- Inevitabilism - the view that, for example, "the victory of transhumanism is inevitable"

- Precautionary - emphasising the downsides of action in areas of relative ignorance

- Proactionary - emphasising the dangers of inaction in areas of relative ignorance

- Techno-optimism - the view that technological development is likely to find good solutions to all existential risks

- Techno-progressive - the view that existential risks must be actively studied and managed [disputed]

Things that are not existential risks

- Declining sperm count in men, whilst problematic for fertility, will not spell the end of humanity[10]

- Increasingly liberal sexual attitudes will not lead to a Sodom and Gomorrah[11] scenario

- The coming of a religious apocalypse or end times is unlikely

- Sex robots, no matter what Futurama says[12]

- Overpopulation caused by life extension will not lead to Soylent Green-type scenarios, but could instead cause localised instabilities and resource depletion[13]

See also

- Dystopian transhumanism

- Existential opportunities

- Future of Humanity Institute

- Lifeboat Foundation

- Suffering risk

External links

- Global catastrophic risk on Wikipedia

- Typology of human extinction risks by Alexey Turchin on the Immortality Roadmap

- Nick Bostrom's 2002 existential risk paper

- http://www.existential-risk.org/

- /r/ExistentialRisk on Reddit

References

- [1] Human Extinction Isn't That Unlikely

- [2] Errata to Global Catastrophic Risks 2016

- [3] https://en.wikipedia.org/wiki/Knowing_(film)

- [4] https://en.wikipedia.org/wiki/WannaCry_ransomware_attack

- [5] e.g. Russian interference in the 2016 United States elections

- [6] e.g. spread of Islamism

- [7] e.g. Theresa May's mass surveillance programmes

- [8] https://en.wikipedia.org/wiki/Khan_Noonien_Singh

- [9] https://en.wikipedia.org/wiki/Dune:_The_Butlerian_Jihad

- [10] http://www.bbc.co.uk/news/health-40719743

- [11] https://en.wikipedia.org/wiki/Sodom_and_Gomorrah

- [12] https://theinfosphere.org/Robosexuality

- [13] Deconstructing overpopulation for life extensionists